Upcoming Technology in Computer Science

1. Soft Computing

Abstract

Soft Computing differs from conventional (hard) computing in that, unlike hard computing, it is tolerant of imprecision, uncertainty, partial truth, and approximation. In effect, the role model for soft computing is the human mind. Principal constituents of Soft Computing are Neural Networks, Fuzzy Logic, Evolutionary Computation, Swarm Intelligence and Bayesian Networks.

The successful applications of soft computing suggest that its impact will be felt increasingly in coming years. Soft computing is likely to play an important role in science and engineering, but eventually its influence may extend much farther.

Soft Computing became a formal area of study in Computer Science in the early 1990s. Earlier computational approaches could model and precisely analyze only relatively simple systems. More complex systems arising in biology, medicine, the humanities, management sciences, and similar fields often remained intractable to conventional mathematical and analytical methods. That said, it should be pointed out that simplicity and complexity of systems are relative, and many conventional mathematical models have been both challenging and very productive. Soft computing deals with imprecision, uncertainty, partial truth, and approximation to achieve tractability, robustness and low solution cost.

Introduction of Soft Computing

Unlike hard computing schemes, which strive for exactness and full truth, soft computing techniques exploit the given tolerance of imprecision, partial truth, and uncertainty for a particular problem. Another common contrast comes from the observation that inductive reasoning plays a larger role in soft computing than in hard computing. Components of soft computing include Neural Networks, Perceptrons, Fuzzy Systems, Bayesian Networks, Swarm Intelligence and Evolutionary Computation.

The highly parallel processing and layered neuronal morphology of the human cognitive faculty (the brain), together with its learning abilities, provide a new tool for designing a cognitive machine that can learn and recognize complicated patterns like human faces and Japanese characters. The theory of fuzzy logic, the basis for soft computing, provides mathematical power for the emulation of the higher-order cognitive functions (the thought and perception processes). A marriage between these evolving disciplines, such as neural computing, genetic algorithms and fuzzy logic, may provide a new class of computing systems (neural-fuzzy systems) for the emulation of higher-order cognitive power.
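
The idea of partial truth can be made concrete with a small sketch. The Python below is illustrative only: the triangular membership shape, the min/max operators and the "warm" temperature set with its breakpoints are assumptions chosen for the example, not something the text prescribes.

```python
def triangular(x, left, peak, right):
    """Degree (0.0 to 1.0) to which x belongs to a triangular fuzzy set."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


def fuzzy_and(a, b):
    """One common choice for fuzzy conjunction: the smaller degree."""
    return min(a, b)


def fuzzy_or(a, b):
    """One common choice for fuzzy disjunction: the larger degree."""
    return max(a, b)


def fuzzy_not(a):
    """Fuzzy complement of a membership degree."""
    return 1.0 - a


def warm(temp_c):
    """Hypothetical fuzzy set 'warm' for room temperature in degrees Celsius."""
    return triangular(temp_c, 15.0, 22.0, 30.0)
```

Under these assumed breakpoints, `warm(22.0)` is fully true (1.0) while `warm(18.5)` is only half true (0.5), which is the kind of graded answer a hard true/false test cannot express.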

Neural Networks:

A neural network, a simplified model of the biological neuron system, is a massively parallel distributed processing system made up of highly interconnected neural computing elements that can learn, and thereby acquire knowledge and make it available for use. It resembles the brain in two respects:

- Knowledge is acquired by the network through a learning process.

- Interconnection strengths known as synaptic weights are used to store the knowledge.

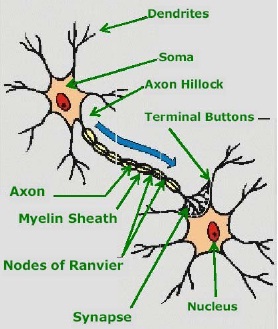

A neuron is built around a cell body, known as the soma, which contains the nucleus. Attached to the soma are long, irregularly shaped filaments called dendrites. The dendrites behave as input channels: all inputs from other neurons arrive through the dendrites.

Another link to the soma, called the axon, is electrically active and serves as an output channel. If the cumulative inputs received by the soma raise the internal electric potential of the cell, known as the membrane potential, above a threshold, then the neuron fires by propagating the action potential down the axon to excite or inhibit other neurons. The axon terminates in a specialized contact called a synapse, which connects the axon with the dendrite links of another neuron.

An artificial neuron model bears a direct analogy to the actual constituents of a biological neuron. This model forms the basis of Artificial Neural Networks.
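
The analogy can be sketched in a few lines of Python. This is a minimal threshold-unit sketch under stated assumptions: inputs play the role of dendrites, weights the role of synaptic strengths, and the output the role of the axon. The function name, the example weights and the threshold values are hypothetical.

```python
def neuron_fires(inputs, weights, threshold):
    """Return 1 if the weighted sum of inputs reaches the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    # Cumulative input, analogous to the potential built up in the soma.
    activation = sum(x * w for x, w in zip(inputs, weights))
    return 1 if activation >= threshold else 0


# Hypothetical settings: two excitatory inputs that must both be active.
AND_WEIGHTS = [1.0, 1.0]
AND_THRESHOLD = 2.0

# Hypothetical settings: the second input is inhibitory (negative weight).
INHIBIT_WEIGHTS = [1.0, -1.0]
INHIBIT_THRESHOLD = 0.5
```

With the assumed values, `neuron_fires([1, 1], AND_WEIGHTS, AND_THRESHOLD)` returns 1, while `neuron_fires([1, 1], INHIBIT_WEIGHTS, INHIBIT_THRESHOLD)` returns 0 because the inhibitory input cancels the excitatory one. Learning, which is not shown here, would consist of adjusting the weights.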

2. Visible Light Communication

Abstract

In the Visible Light Communication project, the short transient time of switching an LED on and off was investigated further. A high-speed wireless communication system, embedded in our LED lighting system, was built.

The duplex communication system provides both a downlink and an uplink, each using a different frequency of light. Several experiments were conducted on the system. Off-the-shelf components were used to build the driver circuit, and the system's performance was evaluated in terms of data transmission rate, transmission distance and the transmitter's field of view.

In the Visible Light Communication paper, transmission of MP3 music was demonstrated using a warm-white LED transmitter. Beyond this, multiple source signals in different frequency bands were transmitted simultaneously through the RGB LED circuitry, and the signals were recovered successfully. This demonstrates the feasibility of our design for signal broadcasting.

Introduction of Visible Light Communication

The Visible Light Communications Consortium (VLCC), which consists mainly of Japanese technology companies, was founded in November 2003. It promotes the use of visible light for data transmission through public relations and tries to establish consistent standards. A list of member companies can be found in the appendix. The work done by the VLCC is split among four committees:

1. Research Advancement and Planning Committee

This committee is concerned with all organizational and administrative tasks, such as budget management and supervising different working groups. It also researches questions such as intellectual property rights in relation to VLC.

2. Technical Committee

The Technical Committee is concerned with technological matters such as data transmission via LEDs and fluorescent lights.

3. Standardization Committee

The Standardization Committee is concerned with standardization efforts and with proposing additions to existing standards.

4. Popularization Committee

The Popularization Committee aims to raise public awareness of VLC as a promising technology with widespread applications. It also conducts market research for that purpose.

Transmitters:

Every kind of light source can theoretically be used as a transmitting device for VLC. However, some are better suited than others. For instance, incandescent lights quickly break down when switched on and off frequently, so they are not recommended as VLC transmitters. More promising alternatives are fluorescent lights and LEDs. VLC transmitters usually also illuminate the rooms in which they are used. This makes fluorescent lights a particularly popular choice, because they can flicker quickly enough to transmit a meaningful amount of data and are already widely used for illumination.
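
How flicker can carry data may be easier to see in code. The sketch below assumes the simplest possible scheme, on-off keying, in which each bit is sent as one light state (on for 1, off for 0). The text does not say which modulation a given transmitter uses, so the scheme and the function names are assumptions made for illustration.

```python
def bytes_to_light_states(data):
    """Map each bit (most significant first) to True (light on) or False (off)."""
    states = []
    for byte in data:
        for bit_index in range(7, -1, -1):
            states.append(bool((byte >> bit_index) & 1))
    return states


def light_states_to_bytes(states):
    """Rebuild the original bytes from a received sequence of light states."""
    if len(states) % 8 != 0:
        raise ValueError("expected a whole number of bytes")
    out = bytearray()
    for start in range(0, len(states), 8):
        value = 0
        for state in states[start:start + 8]:
            value = (value << 1) | int(state)
        out.append(value)
    return bytes(out)
```

For example, the byte for the letter "A" (binary 01000001) becomes the sequence off, on, off, off, off, off, off, on. A practical transmitter would also need to keep the average brightness steady so the flicker stays invisible, which this sketch does not attempt.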

However, with an ever-rising market share of LEDs and further technological improvements such as higher brightness and spectral clarity [Won et al. 2008], LEDs are expected to replace fluorescent lights as illumination sources and VLC transmitters. The simplest white LEDs consist of a bluish to ultraviolet LED surrounded by a phosphor, which is stimulated by the LED itself and emits white light. These LEDs reach data rates of up to 40 Mbit/s.

RGB LEDs do not rely on a phosphor to generate white light. They combine three distinct LEDs (a red, a green and a blue one) which, when lit at the same time, emit light that humans perceive as white. Because there is no delay from stimulating a phosphor first, data rates of up to 100 Mbit/s can be achieved using RGB LEDs.

It should be noted that VLC will probably not be used for massive data transmission. High data rates such as those referred to above were reached under meticulous setups that cannot be expected to be reproduced in real-life scenarios. One can expect to see data rates of about 5 kbit/s in average applications, such as location estimation. The distance over which VLC can reasonably be used ranges up to about 6 meters.
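
To put these figures in perspective, the following back-of-the-envelope sketch computes how long one file would take at each quoted rate. The 4 MB file size is a hypothetical example, and protocol overhead is ignored.

```python
# Data rates quoted in the text, in bits per second.
RATES_BPS = {
    "phosphor white LED": 40e6,
    "RGB LED": 100e6,
    "average application": 5e3,
}

# Hypothetical file size chosen for illustration (roughly one MP3 track).
EXAMPLE_FILE_BYTES = 4_000_000


def transfer_seconds(size_bytes, rate_bits_per_second):
    """Ideal transfer time, ignoring protocol overhead and retransmissions."""
    if rate_bits_per_second <= 0:
        raise ValueError("rate must be positive")
    return size_bytes * 8 / rate_bits_per_second


def transfer_times(size_bytes=EXAMPLE_FILE_BYTES):
    """Transfer time in seconds for each of the quoted rates."""
    return {name: transfer_seconds(size_bytes, rate) for name, rate in RATES_BPS.items()}
```

Under these assumptions the file needs well under a second at the laboratory rates but 6400 seconds, close to two hours, at 5 kbit/s, which is why low-volume uses such as location estimation are the more realistic target.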

3. VoCable

Abstract

Voice (and fax) service over cable networks is known as cable-based Internet Protocol (IP) telephony. Cable-based IP telephony holds the promise of simplified and consolidated communication services provided by a single carrier at a lower cost than consumers currently pay to separate Internet, television and telephony service providers.

Cable operators have already worked through the technical challenges of providing Internet service and optimizing the existing bandwidth in their cable plants to deliver high speed Internet access. Now, cable operators have turned their efforts to the delivery of integrated Internet and voice service using that same cable spectrum.

Cable-based IP telephony falls under the broad umbrella of voice over IP (VoIP), meaning that many of the challenges facing cable operators are the same challenges that telecom carriers face as they work to deliver voice over ATM (VoATM) and frame-relay networks. However, ATM and frame-relay services are targeted primarily at the enterprise, a decision driven by economics and the need for service providers to recoup their initial investments in a reasonable amount of time. Cable, on the other hand, is targeted primarily at the home. Unlike most businesses, the overwhelming majority of homes in the United States are passed by cable, reducing the required up-front infrastructure investment significantly.

Cable is not without competition in the consumer market, for digital subscriber line (xDSL) has emerged as the leading alternative to broadband cable. However, cable operators are well positioned to capitalize on the convergence trend if they are able to overcome the remaining technical hurdles and deliver telephony service that is comparable to the public switched telephone system.

In the case of cable TV, each television signal is given a 6-megahertz (MHz, millions of cycles per second) channel on the cable. The coaxial cable used to carry cable television can carry hundreds of megahertz of signals -- all the channels we could want to watch and more.

In a cable TV system, signals from the various channels are each given a 6-MHz slice of the cable's available bandwidth and then sent down the cable to your house. In some systems, coaxial cable is the only medium used for distributing signals. In other systems, fiber-optic cable goes from the cable company to different neighborhoods or areas. Then the fiber is terminated and the signals move onto coaxial cable for distribution to individual houses.

When a cable company offers Internet access over the cable, Internet information can use the same cables because the cable modem system puts downstream data -- data sent from the Internet to an individual computer -- into a 6-MHz channel. On the cable, the data looks just like a TV channel. So Internet downstream data takes up the same amount of cable space as any single channel of programming. Upstream data -- information sent from an individual back to the Internet -- requires even less of the cable's bandwidth, just 2 MHz, since the assumption is that most people download far more information than they upload.
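
The channel arithmetic can be sketched as follows. The 6-MHz and 2-MHz widths come from the text; the usable spectrum figure passed in is a hypothetical input, and treating the whole spectrum as one interchangeable pool is a simplification made for illustration.

```python
DOWNSTREAM_MHZ = 6  # width of one TV or downstream data channel, from the text
UPSTREAM_MHZ = 2    # width of the upstream data allocation, from the text


def tv_channels_that_fit(usable_mhz):
    """Number of whole 6-MHz channels that fit in the usable spectrum."""
    if usable_mhz < 0:
        raise ValueError("usable_mhz must not be negative")
    return usable_mhz // DOWNSTREAM_MHZ


def tv_channels_with_internet(usable_mhz):
    """Channels left for TV after reserving one downstream and one upstream slice."""
    remaining = usable_mhz - DOWNSTREAM_MHZ - UPSTREAM_MHZ
    return tv_channels_that_fit(max(remaining, 0))
```

Assuming, purely as an example, 600 MHz of usable spectrum, `tv_channels_that_fit(600)` gives 100 channels and `tv_channels_with_internet(600)` gives 98, so adding Internet service costs about as much cable space as two TV channels.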

Putting both upstream and downstream data on the cable television system requires two types of equipment: a cable modem on the customer end and a cable modem termination system (CMTS) at the cable provider's end. Between these two types of equipment, all the computer networking, security and management of Internet access over cable television are put into place.

4. Voice Morphing

Abstract

Voice morphing means the transition of one speech signal into another. Like image morphing, speech morphing aims to preserve the shared characteristics of the starting and final signals, while generating a smooth transition between them.

Speech morphing is analogous to image morphing. In image morphing the in-between images all show one face smoothly changing its shape and texture until it turns into the target face. It is this feature that a speech morph should possess. One speech signal should smoothly change into another, keeping the shared characteristics of the starting and ending signals but smoothly changing the other properties.

The properties of greatest concern in a speech signal are its pitch and envelope information. These two reside in a convolved form in a speech signal, so an efficient method for extracting each of them is necessary. We have adopted a straightforward approach, cepstral analysis, for this purpose. Pitch and formant information in each signal is extracted using the cepstral approach. The processing needed to obtain the morphed speech signal includes cross-fading of envelope information, Dynamic Time Warping to match the major signal features (pitch), and signal re-estimation to convert the morphed speech signal back into the acoustic waveform.

INTROSPECTION OF THE MORPHING PROCESS

Speech morphing can be achieved by transforming the signal's representation from the acoustic waveform obtained by sampling the analog signal, with which many people are familiar, to another representation. To prepare the signal for the transformation, it is split into a number of 'frames', which are sections of the waveform. The transformation is then applied to each frame of the signal. This provides another way of viewing the signal information. The new representation (said to be in the frequency domain) describes the average energy present at each frequency band.
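
The framing and transformation steps can be sketched as below. The text does not name the transform, so a plain discrete Fourier transform is assumed here; the frame length, hop size and test tone are hypothetical, and the direct summation is written for clarity rather than speed.

```python
import cmath
import math


def split_into_frames(samples, frame_length, hop):
    """Cut a sampled waveform into frames of frame_length samples.

    Consecutive frames start hop samples apart; a trailing partial
    frame is dropped.
    """
    if frame_length <= 0 or hop <= 0:
        raise ValueError("frame_length and hop must be positive")
    frames = []
    start = 0
    while start + frame_length <= len(samples):
        frames.append(samples[start:start + frame_length])
        start += hop
    return frames


def dft_magnitudes(frame):
    """Magnitude at each frequency bin from 0 up to half the frame length."""
    n = len(frame)
    magnitudes = []
    for k in range(n // 2 + 1):
        total = sum(
            frame[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)
        )
        magnitudes.append(abs(total))
    return magnitudes


def cosine_wave(cycles_per_frame, length):
    """Hypothetical test signal: a pure tone that falls exactly on one bin."""
    return [math.cos(2 * math.pi * cycles_per_frame * t / length) for t in range(length)]
```

Applying `dft_magnitudes` to `cosine_wave(2, 8)` puts almost all of the energy in bin 2, which is the frequency-domain view of a tone that completes two cycles per frame.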

Further analysis enables two pieces of information to be obtained: pitch information and the overall envelope of the sound. A key element in the morphing is the manipulation of the pitch information. If two signals with different pitches were simply cross-faded, it is highly likely that two separate sounds would be heard. This occurs because the signal would have two distinct pitches, causing the auditory system to perceive two different objects. A successful morph must exhibit a smoothly changing pitch throughout.

The pitch information of each sound is compared to provide the best match between the two signals' pitches. To do this match, the signals are stretched and compressed so that important sections of each signal match in time. The interpolation of the two sounds can then be performed which creates the intermediate sounds in the morph. The final stage is then to convert the frames back into a normal waveform.
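
The stretching and compressing step is what Dynamic Time Warping does. The following is a minimal textbook-style sketch operating on two sequences of per-frame pitch values. The absolute-difference cost and the example pitch tracks are assumptions for illustration, not the exact procedure used in the project.

```python
def dtw_path(a, b):
    """Align sequences a and b; return (total_cost, path).

    path is a list of (index_in_a, index_in_b) pairs from start to end.
    """
    n, m = len(a), len(b)
    if n == 0 or m == 0:
        raise ValueError("both sequences must be non-empty")
    inf = float("inf")
    # cost[i][j] is the cheapest alignment of a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            distance = abs(a[i - 1] - b[j - 1])
            cost[i][j] = distance + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1]
            )
    # Walk back from the end, always taking the cheapest predecessor.
    path = []
    i, j = n, m
    while i > 0 and j > 0:
        path.append((i - 1, j - 1))
        steps = [
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        ]
        _, i, j = min(steps)
    path.reverse()
    return cost[n][m], path
```

With the hypothetical pitch tracks `[100, 110, 120]` and `[100, 100, 110, 120]`, the path pairs the first frame of the shorter track with the first two frames of the longer one, that is, the first sound is stretched at its start so that matching pitches line up in time.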

5. Voice Over Internet Protocol

Abstract

For most people, using an ordinary phone is a common daily occurrence, as is listening to a favorite CD of digitally recorded music. Having your voice transmitted in data packets is only a small extension of these technologies.

Voice was originally transmitted through the phone network as an analog signal, but in much of the world this has been replaced by digital networks. Although many of our phones are still analog, the network that carries the voice has become digital.

In today's phone networks, the analog voice going into our analog phones is digitized as it enters the phone network. This digitization process, shown in Figure 1 below, records a sample of the loudness (voltage) of the signal at fixed intervals of time. These digital voice samples travel through the network one byte at a time.

At the destination phone line, each byte is fed into a device that takes the voltage number and reproduces that voltage for the destination phone. Since the output signal closely matches the input signal, we can understand what was originally spoken.
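
The sampling and reconstruction steps can be sketched as follows. This is a simplified illustration that assumes plain linear quantization of a voltage range into one unsigned byte; the function names and the voltage range are hypothetical, and real telephone networks use a more refined encoding that is not modelled here.

```python
def sample_signal(signal, sample_rate_hz, duration_s):
    """Read signal(t) at fixed intervals of 1 / sample_rate_hz seconds."""
    count = int(sample_rate_hz * duration_s)
    return [signal(i / sample_rate_hz) for i in range(count)]


def voltage_to_byte(voltage, max_voltage=1.0):
    """Map a voltage in [-max_voltage, +max_voltage] to an integer 0..255."""
    clipped = max(-max_voltage, min(max_voltage, voltage))
    return round((clipped + max_voltage) / (2 * max_voltage) * 255)


def byte_to_voltage(value, max_voltage=1.0):
    """Turn a received byte back into the voltage it represents."""
    return value / 255 * 2 * max_voltage - max_voltage
```

A round trip such as `byte_to_voltage(voltage_to_byte(0.42))` returns a value within one 255th of a volt of the original under these assumptions, which illustrates why the reconstructed signal is a close approximation rather than an exact copy.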

The evolution of that technology is to take the numbers that represent the voltage and group them together in a data packet, similar to the way computers send and receive information over the Internet. Voice over IP is the technology of taking units of sampled speech data and carrying them in IP packets.
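
Grouping samples into packets might look like the sketch below. The packet layout (a sequence number plus a payload of sample bytes) is a hypothetical simplification made for illustration and does not correspond to any particular VoIP protocol.

```python
from dataclasses import dataclass


@dataclass
class VoicePacket:
    """Hypothetical packet: a sequence number and a run of sample bytes."""
    sequence: int
    payload: bytes


def packetize(samples, samples_per_packet):
    """Group a stream of one-byte samples into numbered packets."""
    if samples_per_packet <= 0:
        raise ValueError("samples_per_packet must be positive")
    return [
        VoicePacket(sequence, samples[start:start + samples_per_packet])
        for sequence, start in enumerate(range(0, len(samples), samples_per_packet))
    ]


def reassemble(packets):
    """Restore the sample stream even if packets arrived out of order."""
    ordered = sorted(packets, key=lambda packet: packet.sequence)
    return b"".join(packet.payload for packet in ordered)
```

The sequence number is included because a packet network, unlike a dedicated phone circuit, may deliver packets out of order; `reassemble` uses it to put the samples back in their original order.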

So at its most basic level, the concept of VoIP is straightforward. The complexity of VoIP comes in the many ways to represent the data, setting up the connection between the initiator of the call and the receiver of the call, and the types of networks that carry the call.

IP packets are not the only data packets used to carry voice. Although they will not be discussed here, there are also Voice over Frame Relay (VoFR) and Voice over ATM (VoATM) technologies. Many of the VoIP issues discussed also apply to the other packetized voice technologies.

The increasing amount of multimedia content on the Internet has drastically reduced the objections to putting voice on data networks. Basically, Internet telephony transmits multimedia information, such as voice or video, in discrete packets over the Internet or any other IP-based Local Area Network (LAN) or Wide Area Network (WAN).

Commercial Voice over IP (Internet Protocol) was introduced in early 1995, when VocalTec released its Internet telephone software. As the technologies and the market have gradually matured, many industry-leading companies have developed products for Voice over IP applications since 1995. VoIP, or "Voice over Internet Protocol", refers to sending voice and fax phone calls over data networks, particularly the Internet. This technology offers cost savings by making more efficient use of the existing network.

Traditionally, voice and data were carried over separate networks optimized to suit the differing characteristics of voice and data traffic. With advances in technology, it is now possible to carry voice and data over the same networks whilst still catering for the different characteristics required by voice and data. Voice over Internet Protocol (VoIP) is an emerging technology that allows telephone calls or faxes to be transported over an IP data network.

6. Voice Portals

Abstract

In its most generic sense a voice portal can be defined as "speech enabled access to Web based information". In other words, a voice portal provides telephone users with a natural language interface to access and retrieve Web content. An Internet browser can provide Web access from a computer but not from a telephone. A voice portal is a way to do that.

Overview

The voice portal market is exploding with enormous opportunities for service providers to grow business and revenues. Voice-based Internet access uses rapidly advancing speech recognition technology to give users anytime, anywhere communication and access through the human voice, over an office, wireless, or home phone. Here we describe the technology factors that are making voice portals the next big opportunity on the Web, as well as the approaches that service providers and developers of voice portal solutions can follow to make the most of this new market opportunity.

Natural speech is the modality people use when communicating with other people. This makes it easier for a user to learn the operation of voice-activated services. As an output modality, speech has several advantages. First, auditory input does not interfere with visual tasks, such as driving a car. Second, it allows for easy incorporation of sound-based media, such as radio broadcasts, music, and voice-mail messages.

Third, advances in TTS (Text To Speech) technology mean text information can be transferred easily to the user. Natural speech also has an advantage as an input modality, allowing for hands-free and eyes-free use. With proper design, voice commands can be created that are easy for a user to remember. These commands do not have to compete for screen space. In addition, unlike keyboard-based macros (e.g., ctrl-F7), voice commands can be inherently mnemonic ("call United Airlines"), obviating the necessity for hint cards. Speech can be used to create an interface that is easy to use and requires a minimum of user attention.

For a voice portal to function, one of the most important technologies to include is a good VUI (Voice User Interface). There has been a great deal of development in the field of interaction between the human voice and the system, and such interfaces are now being adopted in many other fields. The insurance industry, for example, has turned to interactive voice response (IVR) systems to provide telephonic customer self-service, reduce the load on call-center staff, and cut overall service costs. The promise is certainly there, but how well these systems perform (and, ultimately, whether customers leave the system satisfied or frustrated) depends in large part on the user interface.

Many IVR applications use Touch-Tone interfaces, known as DTMF (dual-tone multi-frequency), in which customers are limited to making selections from a menu. As transactions become more complex, the effectiveness of DTMF systems decreases.
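
The "dual-tone" in DTMF refers to the fact that each key press is signalled by two simultaneous tones, one identifying the keypad row and one the column. The lookup below is a small illustrative sketch using the standard DTMF frequency grid; the function name is hypothetical.

```python
# Standard DTMF grid: one low (row) and one high (column) frequency per key.
ROW_HZ = (697, 770, 852, 941)
COLUMN_HZ = (1209, 1336, 1477, 1633)
KEYPAD = ("123A", "456B", "789C", "*0#D")


def dtmf_frequencies(key):
    """Return the (row_hz, column_hz) tone pair that signals the given key."""
    if len(key) == 1:
        for row, keys in enumerate(KEYPAD):
            column = keys.find(key)
            if column != -1:
                return ROW_HZ[row], COLUMN_HZ[column]
    raise ValueError(f"not a DTMF key: {key!r}")
```

For instance, `dtmf_frequencies("5")` returns `(770, 1336)`. Because the whole vocabulary is sixteen keys, every interaction has to be squeezed into menu selections, which is the limitation the text describes.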

In fact, IVR and speech recognition consultancy Enterprise Integration Group (EIG) reports that customer utilization rates of available DTMF systems in financial services, where transactions are primarily numeric, are as high as 90 percent; in contrast, customers' use of insurers' DTMF systems is less than 40 percent.

Enter some more acronyms. Automated speech recognition (ASR) is the engine that drives today's voice user interface (VUI) systems. These let customers break the 'menu barrier' and perform more complex transactions over the phone. "In many cases the increase in self-service when moving from DTMF to speech can be dramatic," said EIG president Rex Stringham.

7. Voice Quality

Abstract

Voice quality (VQ) means different things, depending on one's perspective. On the one hand, it is a way of describing and evaluating speech fidelity, intelligibility, and the characteristics of the analog voice signal itself.

On the other, it can describe the performance of the underlying transport mechanisms. Here, VQ is defined as the qualitative and quantitative measure of the sound and conversation quality of a telephone call.

As the telephone industry changes (that is, as new technologies and services are added), existing technologies are applied in different ways and new players become involved. Thus, maintaining the basic quality of a telephone call becomes increasingly complex. Although VQ has evolved over the years to be consistently high and predictable, it is now an important differentiating factor for new voice-over-packet (VoP) networks and equipment. Consequently, measuring VQ in a relatively inexpensive, reliable, and objective way becomes very important.

This tutorial discusses VQ-influencing factors as well as network impairments and their causes in a converged telephony and Internet protocol (IP) network, all from the perspective of the quality of the analog voice signal. Network performance issues will be discussed where appropriate, but the topic of VoP performance with regard to packet delivery is not covered in any real depth.

8. Voice Browser

Abstract

A Voice Browser is a "device which interprets a (voice) markup language and is capable of generating voice output and/or interpreting voice input, and possibly other input/output modalities." The definition of a voice browser, above, is a broad one.

The fact that the system deals with speech is obvious given the first word of the name, but what makes a software system that interacts with the user via speech a "browser"?

The information that the system uses (for either domain data or dialog flow) is dynamic and comes from somewhere on the Internet. From an end-user's perspective, the impetus is to provide a service similar to what graphical browsers of HTML and related technologies do today, but on devices that are not equipped with full browsers or even the screens to support them. This situation is only exacerbated by the fact that much of today's content depends on the ability to run scripting languages and 3rd-party plug-ins to work correctly.

Much of the effort concentrates on using the telephone as the first voice browsing device. This is not to say that it is the preferred embodiment for a voice browser, only that the number of access devices is huge and that the telephone sits at the opposite end of the graphical-browser continuum, which highlights the requirements that make a speech interface viable. By the first meeting it was clear that this scope-limiting was also needed in order to make progress, given that there are significant challenges in designing a system that uses or integrates with existing content, or that automatically scales to the features of various access devices.

Grammar Representation Requirements

It defines a speech recognition grammar specification language that will be generally useful across a variety of speech platforms used in the context of a dialog and synthesis markup environment.

When the system or application needs to describe to the speech-recognizer what to listen for, one way it can do so is via a format that is both human and machine-readable.

Model Architecture for Voice Browser Systems Representations

"To assist in clarifying the scope of charters of each of the several subgroups of the W3C Voice Browser Working Group, a representative or model architecture for a typical voice browser application has been developed. This architecture illustrates one possible arrangement of the main components of a typical system, and should not be construed as a recommendation."

Natural Language Processing Requirements

It establishes a prioritized list of requirements for natural language processing in a voice browser environment. The data that a voice browser uses to create a dialog can vary from a rigid set of instructions and state transitions, whether declaratively and/or procedurally stated, to a dialog that is created dynamically from information and constraints about the dialog itself. The NLP requirements document describes the requirements of a system that takes the latter approach, using an example paradigm of a set of tasks operating on a frame-based model. Slots in the frame that are optionally filled guide the dialog and provide contextual information used for task-selection.
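
The frame-based idea can be illustrated with a short sketch in which the next question is chosen by looking for the first unfilled slot. The flight-booking slots and the helper names are hypothetical examples, not taken from the requirements document.

```python
def next_unfilled_slot(frame):
    """Return the name of the first slot without a value, or None if complete."""
    for slot, value in frame.items():
        if value is None:
            return slot
    return None


def fill_slots(frame, understood):
    """Return a copy of the frame updated with values found in the user's input.

    Only slots that already exist in the frame are filled; anything
    else in the input is ignored.
    """
    updated = dict(frame)
    for slot, value in understood.items():
        if slot in updated:
            updated[slot] = value
    return updated


# Hypothetical frame for a flight-booking task; None marks an empty slot.
EMPTY_BOOKING = {"origin": None, "destination": None, "date": None}
```

If the caller's first utterance already supplies both origin and destination, `fill_slots` fills two slots at once and `next_unfilled_slot` moves straight to `"date"`, so the dialog adapts to what has been said instead of following a fixed script.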

Speech Synthesis Markup Requirements

It establishes a prioritized list of requirements for speech synthesis markup which any proposed markup language should address. A text-to-speech system, which is usually a stand-alone module that does not actually "understand the meaning" of what is spoken, must rely on hints to produce an utterance that is natural and easy to understand, and moreover, evokes the desired meaning in the listener. In addition to these prosodic elements, the document also describes issues such as multi-lingual capability, pronunciation issues for words not in the lexicon, time-synchronization, and textual items that require special preprocessing before they can be spoken properly.

9. Voice XML

Abstract

The origins of VoiceXML began in 1995 as an XML-based dialog design language intended to simplify the speech recognition application development process within an AT&T project called Phone Markup Language (PML). As AT&T reorganized, teams at AT&T, Lucent and Motorola continued working on their own PML-like languages.

In 1998, W3C hosted a conference on voice browsers. By this time, AT&T and Lucent had different variants of their original PML, while Motorola had developed VoxML, and IBM was developing its own SpeechML. Many other attendees at the conference were also developing similar languages for dialog design, such as HP's TalkML and PipeBeach's VoiceHTML.

The VoiceXML Forum was then formed by AT&T, IBM, Lucent, and Motorola to pool their efforts. The mission of the VoiceXML Forum was to define a standard dialog design language that developers could use to build conversational applications. They chose XML as the basis for this effort because it was clear to them that this was the direction technology was going.

In 2000, the VoiceXML Forum released VoiceXML 1.0 to the public. Shortly thereafter, VoiceXML 1.0 was submitted to the W3C as the basis for the creation of a new international standard. VoiceXML 2.0 is the result of this work based on input from W3C Member companies, other W3C Working Groups, and the public.

VoiceXML is designed for creating audio dialogs that feature synthesized speech, digitized audio, recognition of spoken and DTMF key input, recording of spoken input, telephony, and mixed initiative conversations. Its major goal is to bring the advantages of Web-based development and content delivery to interactive voice response applications.

Here are two short examples of VoiceXML. The first is the venerable "Hello World":

<?xml version="1.0" encoding="UTF-8"?>
<vxml xmlns="http://www.w3.org/2001/vxml"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="http://www.w3.org/2001/vxml
                          http://www.w3.org/TR/voicexml20/vxml.xsd"
      version="2.0">
  <form>
    <block>Hello World!</block>
  </form>
</vxml>

The top-level element is <vxml>, which is mainly a container for dialogs. There are two types of dialogs: forms and menus. Forms present information and gather input; menus offer choices of what to do next. This example has a single form, which contains a block that synthesizes and presents "Hello World!" to the user. Since the form does not specify a successor dialog, the conversation ends.

Our second example asks the user for a choice of drink and then submits it to a server script:

<?xml version="1.0" encoding="UTF-8"?>
<vxml xmlns="http://www.w3.org/2001/vxml"
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="http://www.w3.org/2001/vxml
                          http://www.w3.org/TR/voicexml20/vxml.xsd"
      version="2.0">
  <form>
    <field name="drink">
      <prompt>Would you like coffee, tea, milk, or nothing?</prompt>
      <grammar src="drink.grxml" type="application/srgs+xml"/>
    </field>
    <block>
      <submit next="https://www.drink.example.com/drink2.asp"/>
    </block>
  </form>
</vxml>

A field is an input field. The user must provide a value for the field before proceeding to the next element in the form. A sample interaction is:

C (computer): Would you like coffee, tea, milk, or nothing?

H (human): Orange juice.

C: I did not understand what you said. (a platform-specific default message.)

C: Would you like coffee, tea, milk, or nothing?

H: Tea

C: (continues in document drink2.asp)
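
The "I did not understand" message in the interaction above comes from the platform. As a hedged sketch, the same field could supply its own handlers using the standard VoiceXML 2.0 `<nomatch>`, `<noinput>`, and `<reprompt/>` elements. The message wording is invented for illustration, and the namespace URI is written as it appears in the W3C VoiceXML 2.0 specification.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<vxml xmlns="http://www.w3.org/2001/vxml" version="2.0">
  <form>
    <field name="drink">
      <prompt>Would you like coffee, tea, milk, or nothing?</prompt>
      <grammar src="drink.grxml" type="application/srgs+xml"/>
      <!-- Runs when the caller says something the grammar does not accept. -->
      <nomatch>
        Sorry, I can only offer coffee, tea, milk, or nothing.
        <reprompt/>
      </nomatch>
      <!-- Runs when the caller says nothing before the platform's timeout. -->
      <noinput>
        I did not hear anything.
        <reprompt/>
      </noinput>
    </field>
    <block>
      <submit next="https://www.drink.example.com/drink2.asp"/>
    </block>
  </form>
</vxml>
```

With these handlers, an answer such as "Orange juice" would trigger the custom apology and then the original prompt again, and the field would stay unfilled until the caller gives a value the grammar accepts.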

10. Wardriving

Abstract

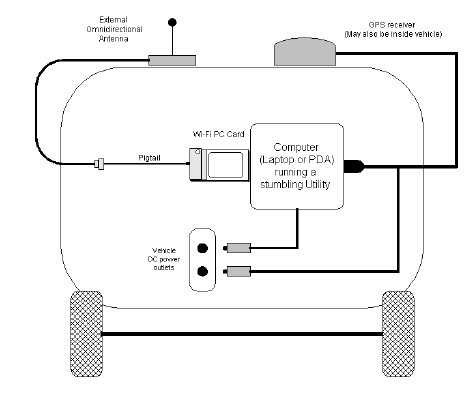

Wardriving is the search for Wi-Fi wireless networks from a moving vehicle. It involves using a car or truck and a Wi-Fi-equipped computer, such as a laptop or a PDA, to detect the networks. It was also known as 'WiLDing' (Wireless LAN Driving).

Many wardrivers use GPS devices to record the location of each network they find and log it on a website. For better range, antennas are built or bought, and vary from omnidirectional to highly directional. Software for wardriving is freely available on the Internet, notably NetStumbler for Windows, Kismet for Linux, and KisMAC for Macintosh.

Wardriving was named after wardialing because it likewise involves searching for computer systems. Wardialing software used a phone modem to dial numbers sequentially and see which ones were connected to a fax machine, a computer, or a similar device.

Introduction of Wardriving

WarDriving is an activity that is misunderstood by many people. This applies both to the general public and to the news media that have reported on WarDriving. Because the name "WarDriving" has an ominous sound to it, many people associate WarDriving with criminal activity. The name originated from wardialing, a technique popularized by a character played by Matthew Broderick in the film WarGames, and named after that film. Wardialing in this context refers to the practice of using a computer to dial many phone numbers in the hopes of finding an active modem.

A WarDriver drives around an area, often after mapping out a route first, to locate all of the wireless access points in that area. Once these access points are discovered, the WarDriver uses a software program or Web site to map the results. Based on these results, a statistical analysis is performed. This analysis can cover one drive, one area, or a general overview of all wireless networks. The concept of driving around discovering wireless networks probably began the day after the first wireless access point was deployed.

However, WarDriving became better known when the process was automated by Peter Shipley, a computer security consultant in Berkeley, California. During the fall of 2000, Shipley conducted an 18-month survey of wireless networks in Berkeley, California and reported his results at the annual DefCon hacker conference in July of 2001. This presentation, designed to raise awareness of the insecurity of wireless networks that were deployed at that time, laid the groundwork for the "true" WarDriver.

The truth about WarDriving:

The reality of WarDriving is simple. Computer security professionals, hobbyists, and others are generally interested in providing information to the public about security vulnerabilities that are present with "out of the box" configurations of wireless access points. Wireless access points that can be purchased at a local electronics or computer store are not geared toward security. They are designed so that a person with little or no understanding of networking can purchase a wireless access point, and with little or no outside help, set it up and begin using it.

Computers have become a staple of everyday life. Technology that makes using computers easier and more fun needs to be available to everyone. Companies such as Linksys and D-Link have been very successful at making these new technologies easy for end users to set up and begin using. To do otherwise would alienate a large part of their target market.

According to the FBI, it is not illegal to scan access points, but once a theft of service, denial of service, or theft of information occurs, it becomes a federal violation. While this is good general information, any questions about the legality of a specific act in the United States should be posed directly to the local FBI field office, a cyber crime attorney, or the U.S. Attorney's office.

This information applies only to the United States. WarDrivers are encouraged to investigate the local laws where they live to ensure that they aren't inadvertently violating the law. Understanding the distinction between "scanning" or identifying wireless access points and actually using an access point is understanding the difference between WarDriving, a legal activity, and theft, an obviously illegal activity.
